The Role of the Prompt

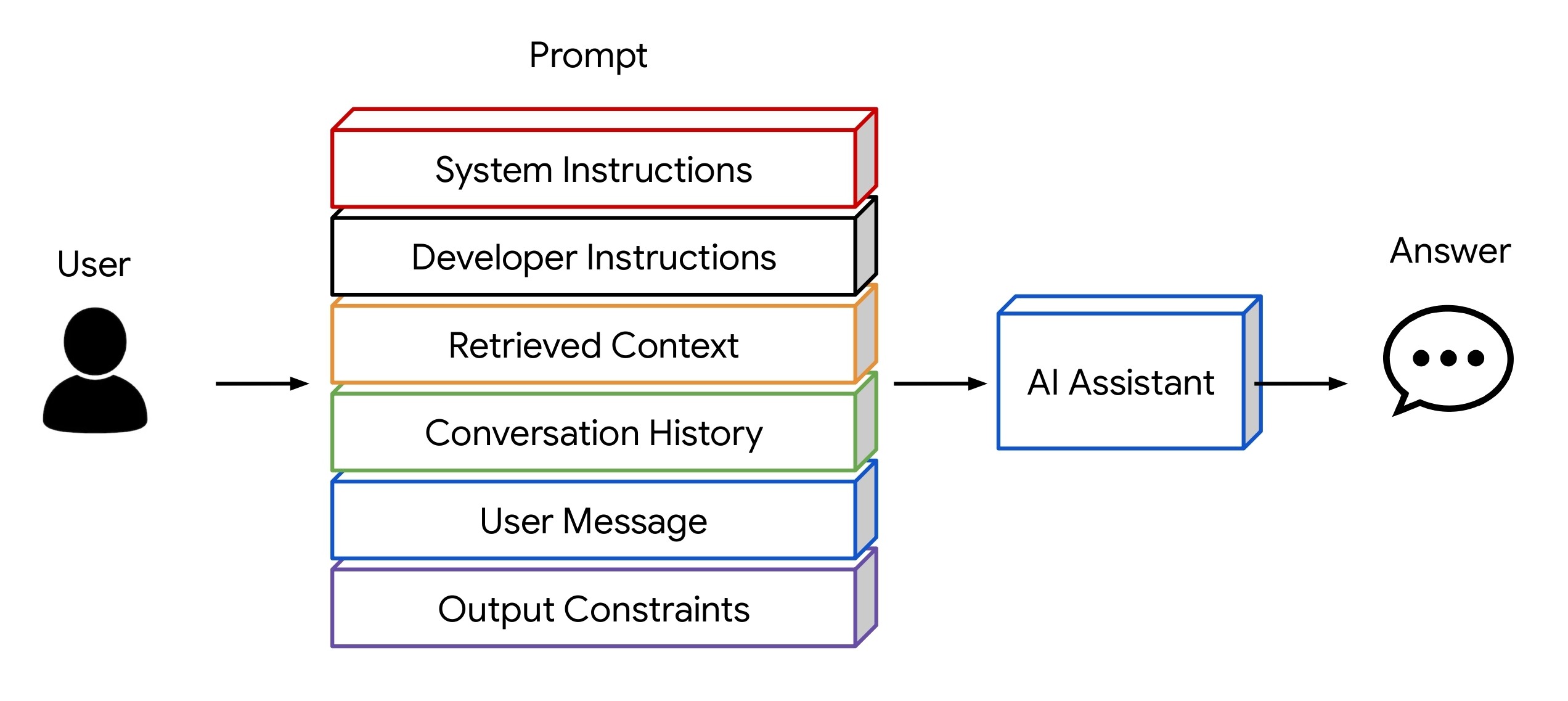

AI assistants rely on structured prompts. The input sent to the language model is much more than the user message: it is composed of multiple components, namely system instructions, developer instructions, retrieved context, conversation history, the user message, and output constraints.

Together, these components determine how the model should behave, which information it can rely on, and how its output should be structured. The user message is only one element of this construction, embedded within a larger framework defined by the developer.
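As a minimal sketch, these components can be pictured as layers of a chat-style message list. The `PromptParts` container, the `build_messages` function, and where each component is placed are illustrative assumptions, not any particular provider's API; the role names follow the common system/developer/user/assistant convention.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    """Illustrative container for the components of an assistant prompt."""
    system: str
    developer: str
    user_message: str
    retrieved_context: list[str] = field(default_factory=list)
    history: list[dict] = field(default_factory=list)  # prior {"role", "content"} turns
    output_constraints: str = ""


def build_messages(parts: PromptParts) -> list[dict]:
    """Assemble all components into one chat-style message list.

    The user message is only the final element; everything before it
    is defined by the developer.
    """
    messages = [
        {"role": "system", "content": parts.system},
        {"role": "developer", "content": parts.developer},
    ]
    if parts.retrieved_context:
        context = "\n\n".join(parts.retrieved_context)
        messages.append({"role": "developer", "content": f"Context:\n{context}"})
    messages.extend(parts.history)
    # One common placement: append output constraints to the final user turn.
    user_content = parts.user_message
    if parts.output_constraints:
        user_content += f"\n\n{parts.output_constraints}"
    messages.append({"role": "user", "content": user_content})
    return messages
```

Where retrieved context and output constraints go (separate messages, or folded into the user turn) is a design choice that varies between assistants.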

Among all elements of an AI assistant, the prompt stands out as particularly attractive for optimization. Compared with inference parameters, the system environment, or the model architecture itself, the prompt is easier to access and cheaper to modify. For more details, see our blog post "Prompt Optimization Is Mission Critical".

Manual Prompt Engineering

Manual prompt engineering is the most common approach to controlling the behavior of large language models. It relies on hand-crafted instructions written directly by the developer and embedded in the prompt.

Typical techniques include role prompting, explicit constraints, formatting rules, and the use of few-shot examples or step-by-step reasoning instructions. These elements are combined iteratively until the model produces acceptable outputs.

This method requires no additional tooling, which makes it a natural starting point. However, it is often time-consuming, sensitive to small changes in wording, and difficult to reproduce or scale reliably.
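The techniques listed in this section can be sketched as a helper that assembles hand-written pieces into one instruction string. The `manual_prompt` function and its arguments are hypothetical; in manual engineering each argument is edited by hand and the outputs are re-checked after every change, which is the slow, fragile loop described above.

```python
def manual_prompt(
    role: str,
    constraints: list[str],
    format_rule: str,
    examples: list[tuple[str, str]],
    step_by_step: bool = True,
) -> str:
    """Combine common hand-crafted techniques into one instruction string."""
    lines = [role]                                # role prompting
    lines += [f"- {c}" for c in constraints]      # explicit constraints
    lines.append(f"Format: {format_rule}")        # formatting rule
    if step_by_step:                              # reasoning instruction
        lines.append("Think through the request step by step before answering.")
    for question, answer in examples:             # few-shot examples
        lines.append(f"Q: {question}\nA: {answer}")
    return "\n".join(lines)
```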

Automatic Prompt Optimization

Manual prompt tuning does not scale. As prompts grow longer, tasks diversify, or performance must be evaluated systematically, automation becomes necessary. Automatic prompt optimization methods address this challenge by algorithmically generating and refining prompts based on feedback. These methods mainly differ in how prompts are generated, evaluated, and improved over time.

LLM-based Optimization

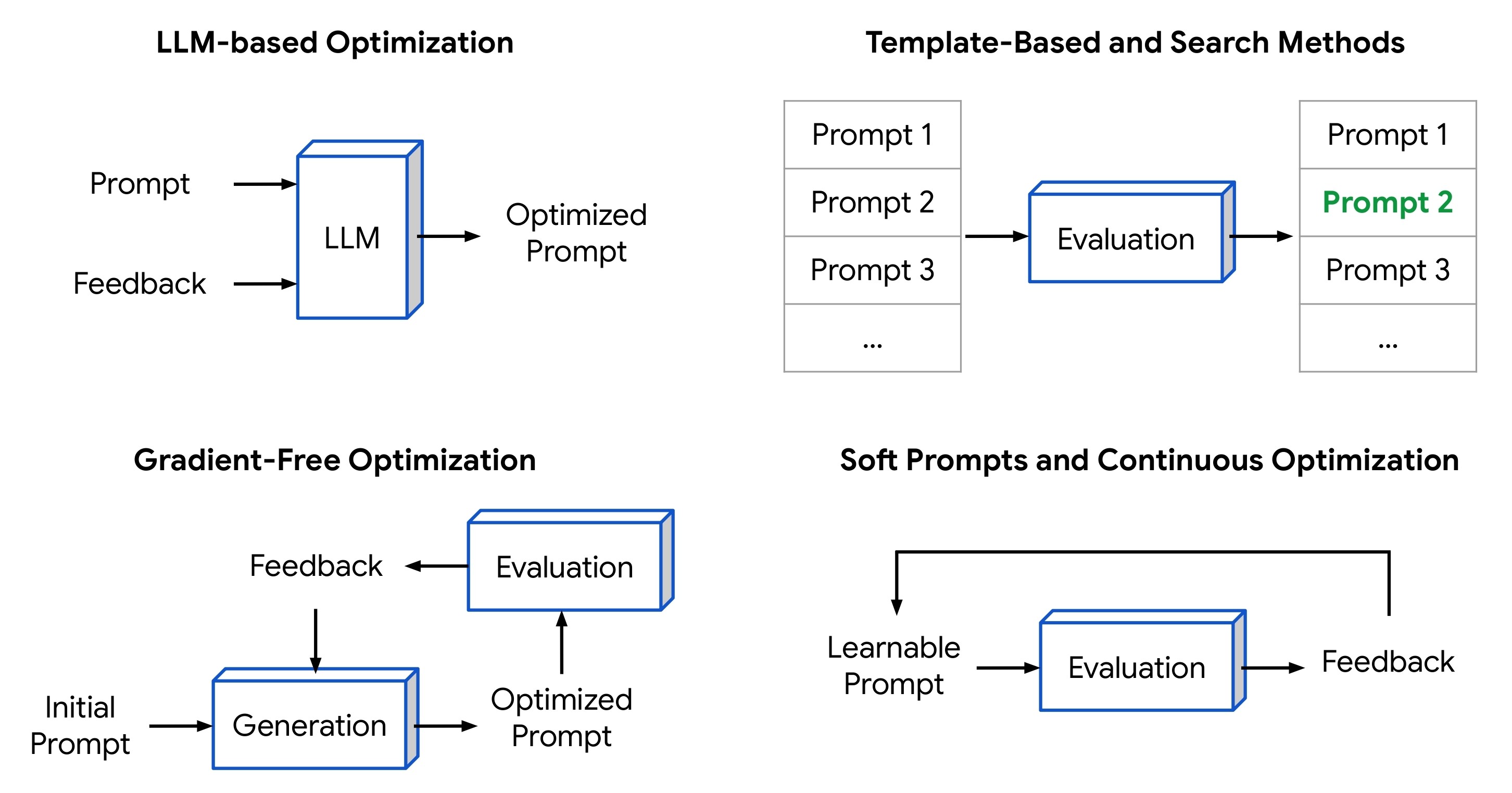

LLM-based optimization uses a language model to analyze and rewrite prompts. Starting from an initial prompt, the model proposes revised versions based on self-critique or task feedback. Each revision is scored on the task, and the process repeats until improvements saturate.

In this setting, the language model itself serves as both the generator of new prompts and the mechanism for refinement.
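A minimal sketch of this loop, assuming two hypothetical callables: `propose`, standing in for an LLM call that critiques and rewrites the current prompt given its score, and `evaluate`, returning task performance. No real model client is used here.

```python
from typing import Callable


def llm_optimize(
    initial_prompt: str,
    propose: Callable[[str, float], str],
    evaluate: Callable[[str], float],
    max_rounds: int = 10,
    min_gain: float = 1e-3,
) -> tuple[str, float]:
    """Iteratively rewrite a prompt until improvements saturate."""
    best_prompt, best_score = initial_prompt, evaluate(initial_prompt)
    for _ in range(max_rounds):
        # The (hypothetical) LLM sees the current prompt and its score.
        candidate = propose(best_prompt, best_score)
        score = evaluate(candidate)
        if score - best_score < min_gain:
            break  # no meaningful improvement: treat the search as saturated
        best_prompt, best_score = candidate, score
    return best_prompt, best_score
```

Because LLM proposals are stochastic, stopping at the first non-improving candidate is a simplification; a patience counter that tolerates a few failed rewrites is a common variant.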

Template-Based and Search Methods

In template-based approaches, prompts are written as parameterized templates with adjustable components, such as instruction wording, constraints, output formats, and few-shot examples. Prompt variants are generated by enumerating or sampling these components, using grid search, random search, or rule-based mutations. Each candidate prompt is then evaluated on a set of examples using a predefined metric, and the best-performing prompt is selected.

Overall, this is a structured search over a predefined prompt space driven by explicit evaluation.
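A minimal grid-search sketch over a parameterized template. The template, its slot names, and the `evaluate` callable (assumed to return a mean metric over an evaluation set) are illustrative assumptions.

```python
import itertools
from typing import Callable

# Hypothetical template; slot names must match the keys of `components`.
TEMPLATE = "{instruction}\n{constraint}\nRespond {fmt}."


def grid_search(
    components: dict[str, list[str]],
    evaluate: Callable[[str], float],
) -> tuple[str, float]:
    """Enumerate every combination of template slots and keep the best prompt."""
    names = list(components)
    best_prompt, best_score = "", float("-inf")
    for values in itertools.product(*(components[n] for n in names)):
        prompt = TEMPLATE.format(**dict(zip(names, values)))
        score = evaluate(prompt)  # e.g. mean metric over an evaluation set
        if score > best_score:
            best_prompt, best_score = prompt, score
    return best_prompt, best_score
```

Replacing `itertools.product` with random sampling of combinations turns this into random search, which matters because the number of candidates grows multiplicatively with every slot added.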

Gradient-Free Optimization

Gradient-free optimization methods treat prompt tuning as an adaptive optimization problem rather than a static search. Instead of evaluating prompt variants independently, these methods use past evaluation scores to update how new prompts are generated. For instance, evolutionary methods maintain a population of prompts and evolve it through selection and mutation.

As a result, the search process itself improves over time based on accumulated evaluations, rather than relying on a fixed prompt space.
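A minimal evolutionary sketch: each prompt is represented as a choice of component values, the top half of each generation survives (selection), and the rest of the population is refilled with mutated copies of survivors (mutation). The representation, hyperparameters, and the `evaluate` callable are assumptions for illustration; LLM-driven variants would replace the random `mutate` step with a language-model rewrite.

```python
import random
from typing import Callable


def evolve(
    components: dict[str, list[str]],
    evaluate: Callable[[dict[str, str]], float],
    population_size: int = 8,
    generations: int = 10,
    mutation_rate: float = 0.3,
    seed: int = 0,
) -> tuple[dict[str, str], float]:
    """Evolve prompt configurations; past scores decide who reproduces."""
    rng = random.Random(seed)

    def random_prompt() -> dict[str, str]:
        return {slot: rng.choice(options) for slot, options in components.items()}

    def mutate(parent: dict[str, str]) -> dict[str, str]:
        child = dict(parent)
        for slot, options in components.items():
            if rng.random() < mutation_rate:
                child[slot] = rng.choice(options)
        return child

    population = [random_prompt() for _ in range(population_size)]
    for _ in range(generations):
        # Selection: the top half by score survives unchanged.
        ranked = sorted(population, key=evaluate, reverse=True)
        survivors = ranked[: population_size // 2]
        # Mutation: refill the population with mutated copies of survivors.
        children = [mutate(rng.choice(survivors))
                    for _ in range(population_size - len(survivors))]
        population = survivors + children
    best = max(population, key=evaluate)
    return best, evaluate(best)
```

Because survivors are carried over unchanged, the best score never decreases between generations, provided `evaluate` is deterministic.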

Soft Prompts and Continuous Optimization

Soft prompt methods change the prompt representation itself by replacing discrete text with learnable embeddings. Instead of editing words or instructions, a small set of prompt vectors is prepended to the model's input embeddings and optimized directly in embedding space. Prompt quality is evaluated through a task loss, and gradients are backpropagated to update only the prompt embeddings while keeping the model parameters fixed.

Improvement results from standard gradient-based optimization, which makes tuning stable and efficient, at the cost of requiring training data and gradient access to the model's internals, which models served only through a text API do not provide.
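The mechanics can be illustrated without a deep-learning framework. In this sketch the "model" is a hypothetical toy (fixed token embeddings, mean pooling, and a fixed linear classifier), not a language model, and only the `PROMPT_LEN` prompt vectors receive gradient updates. Real prompt tuning would use an autograd framework and a pretrained model in which the prompt interacts with the input through attention; because this toy model is linear after pooling, the soft prompt can only shift its predictions.

```python
import math
import random

DIM = 4         # embedding size of the toy model
PROMPT_LEN = 3  # number of learnable soft prompt vectors

rng = random.Random(0)
# Frozen toy "model": fixed token embeddings and a fixed linear classifier
# applied to the mean-pooled sequence. These values are never updated.
EMBED = {tok: [rng.uniform(-1, 1) for _ in range(DIM)]
         for tok in ["where", "is", "my", "order", "refund", "thanks"]}
W = [rng.uniform(-1, 1) for _ in range(DIM)]
B = -1.0


def forward(prompt, tokens):
    """Prepend the soft prompt to the input embeddings, then run the frozen model."""
    seq = prompt + [EMBED[t] for t in tokens]
    n = len(seq)
    pooled = [sum(vec[j] for vec in seq) / n for j in range(DIM)]
    logit = sum(w * h for w, h in zip(W, pooled)) + B
    return 1.0 / (1.0 + math.exp(-logit)), n


def task_loss(prompt, data):
    """Mean binary cross-entropy of the frozen model with this soft prompt."""
    total = 0.0
    for tokens, label in data:
        p, _ = forward(prompt, tokens)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(data)


def tune_soft_prompt(data, steps=200, lr=0.5):
    """Gradient descent on the prompt vectors only."""
    prompt = [[0.0] * DIM for _ in range(PROMPT_LEN)]
    for _ in range(steps):
        grad = [[0.0] * DIM for _ in range(PROMPT_LEN)]
        for tokens, label in data:
            p, n = forward(prompt, tokens)
            # Chain rule: dLoss/dlogit = p - label, dlogit/dprompt[i][j] = W[j] / n
            for i in range(PROMPT_LEN):
                for j in range(DIM):
                    grad[i][j] += (p - label) * W[j] / n / len(data)
        # Only the prompt is updated; EMBED, W and B stay frozen.
        prompt = [[v - lr * g for v, g in zip(vec, g_vec)]
                  for vec, g_vec in zip(prompt, grad)]
    return prompt
```

The gradient is written out by hand via the chain rule only to keep the example dependency-free.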

Evaluation: How Do You Know a Prompt Is Better?

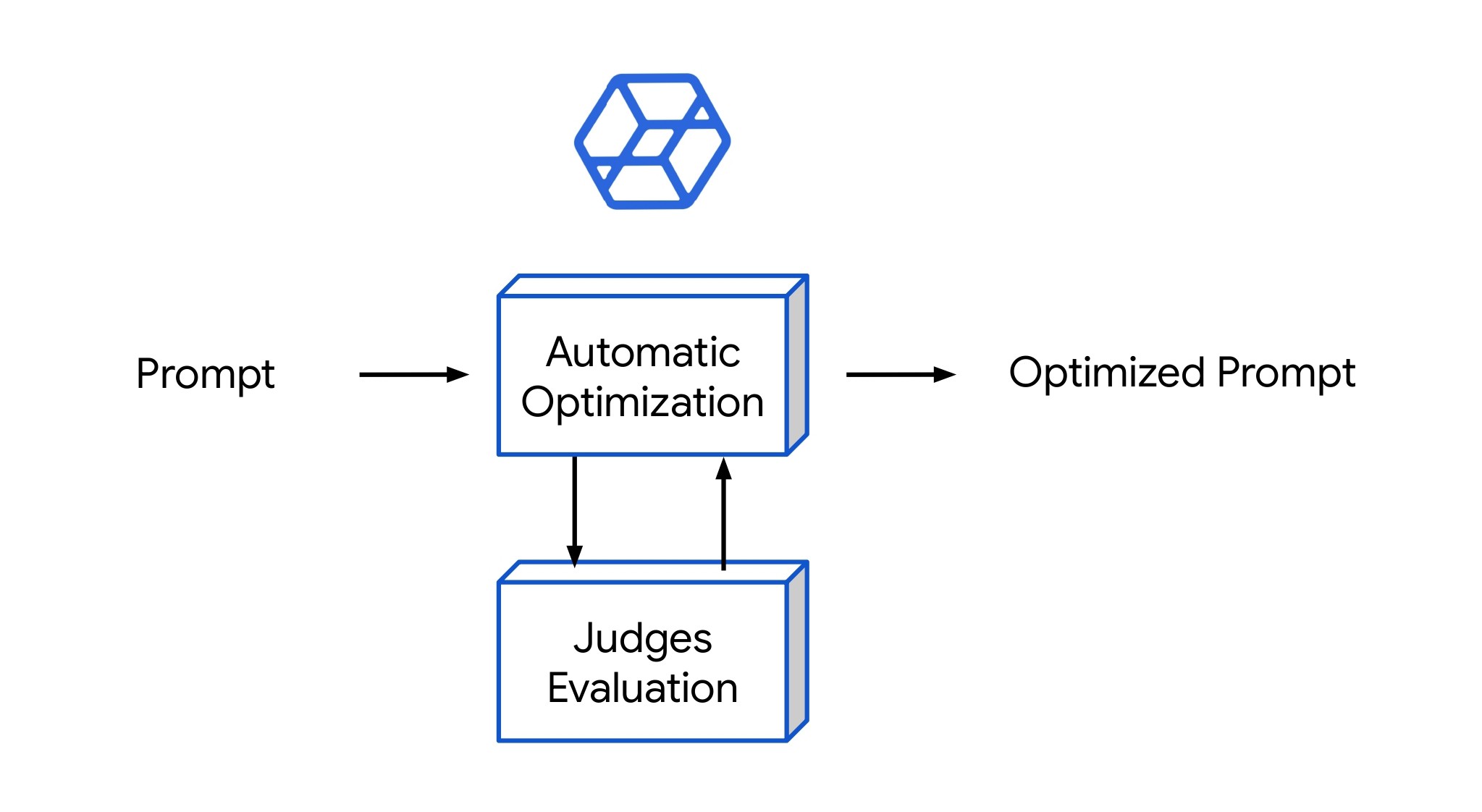

Automatic prompt optimization relies on our ability to evaluate the quality of a prompt. Automatic evaluation methods, e.g. LLM-as-a-judge or verifiable judges, provide scalable feedback that can be used to compare prompt variants and guide optimization without a human in the loop.
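Both kinds of judge can share one interface: a function from a model output and a reference to a score. In this sketch, `ask_llm` is a placeholder for whatever model client is used, and the grading instruction and 0 to 10 scale are illustrative assumptions.

```python
import re
from typing import Callable

# A judge maps (model_output, reference) to a score in [0, 1].
Judge = Callable[[str, str], float]


def _normalize(text: str) -> str:
    return re.sub(r"\s+", " ", text).strip().lower()


def exact_match_judge(output: str, reference: str) -> float:
    """Verifiable judge: normalized exact match against a reference answer."""
    return float(_normalize(output) == _normalize(reference))


def make_llm_judge(ask_llm: Callable[[str], str], criterion: str) -> Judge:
    """LLM-as-a-judge: ask a model to grade an output and parse a 0-10 score."""
    def judge(output: str, reference: str) -> float:
        reply = ask_llm(
            f"Criterion: {criterion}\nReference: {reference}\n"
            f"Answer: {output}\n"
            "Reply with a single integer score from 0 to 10."
        )
        match = re.search(r"\d+", reply)
        # Unparseable replies score 0; out-of-range scores are clipped to 10.
        return min(int(match.group()), 10) / 10 if match else 0.0
    return judge


def score_prompt(outputs: list[str], references: list[str], judge: Judge) -> float:
    """Average judge score of one prompt variant's outputs over an eval set."""
    return sum(judge(o, r) for o, r in zip(outputs, references)) / len(references)
```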

In practice, this requires flexible and reliable evaluation tooling. Mentiora AI enables users to create automatic judges from metric specifications and labels. It also supports comparing different prompt optimization algorithms, making it possible to find high-quality prompts and the best tradeoffs between performance and inference cost.
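Independently of any particular tool (Mentiora AI's own interface is not shown here), the "best tradeoffs between performance and inference cost" can be read as a Pareto front: the prompts that no other prompt beats on both score and cost. Measuring cost as, for example, average tokens per request is an assumption of this sketch.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    score: float  # higher is better, e.g. mean judge score
    cost: float   # lower is better, e.g. average tokens per request


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep candidates that no other candidate beats on both score and cost."""
    def dominates(a: Candidate, b: Candidate) -> bool:
        return (a.score >= b.score and a.cost <= b.cost
                and (a.score > b.score or a.cost < b.cost))

    front = [c for c in candidates
             if not any(dominates(other, c) for other in candidates)]
    return sorted(front, key=lambda c: c.cost)
```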

Case Study

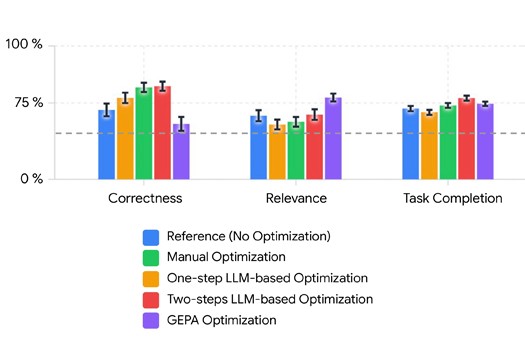

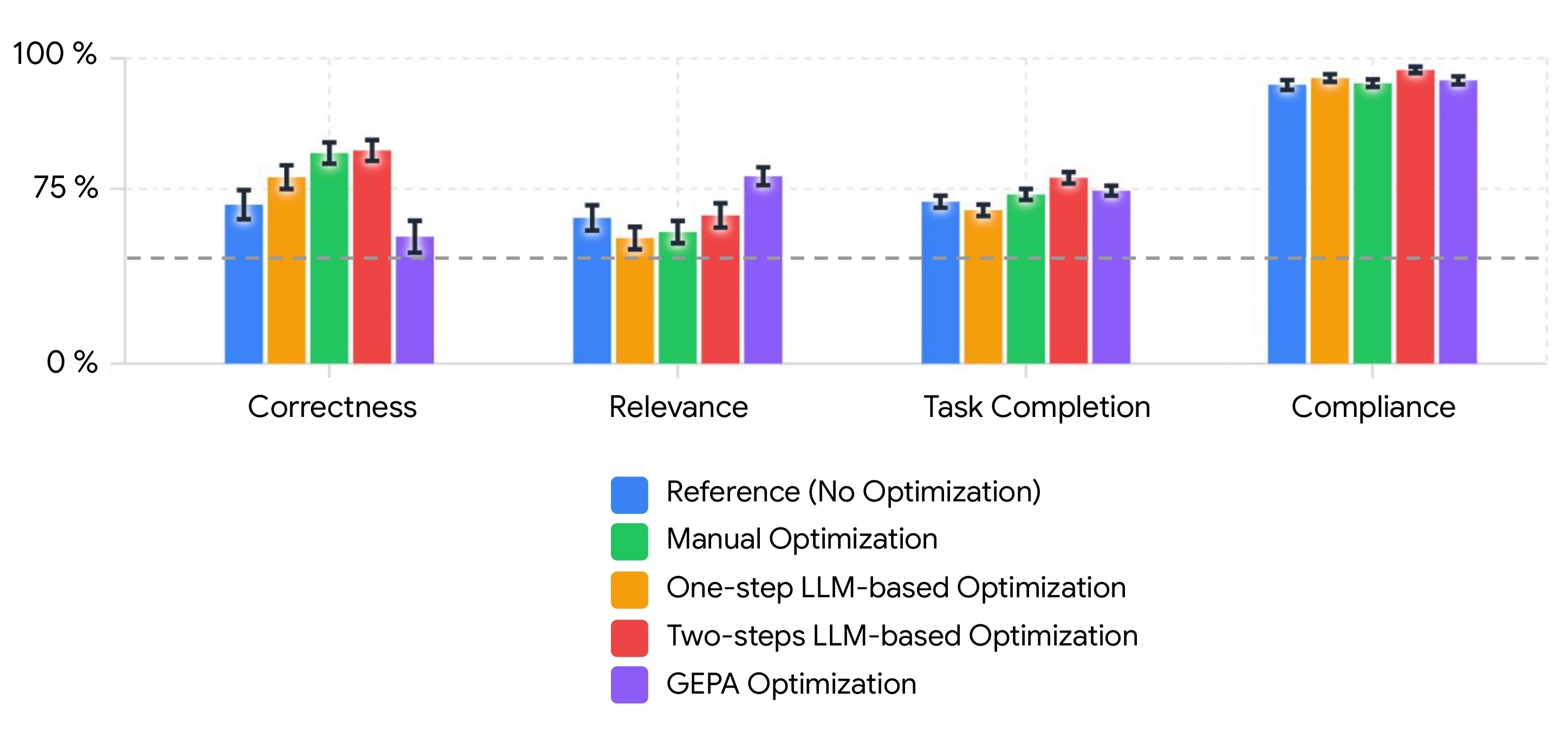

To illustrate these concepts, we use Mentiora AI to compare different prompt optimization algorithms for a retail AI assistant.

Step 1: Define the dataset

We construct a synthetic dataset containing questions that users might realistically ask a retail AI assistant.

Step 2: Define evaluation metrics

We write specifications to evaluate the AI assistant's performance on four metrics: "Correctness", "Relevance", "Task Completion", and "Compliance".
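A sketch of how the four metrics might be represented and aggregated. The specification texts below are hypothetical placeholders, not the ones used in this case study, and `judge` is any callable (for example an LLM-as-a-judge) that scores one answer against one specification.

```python
from typing import Callable

# Hypothetical specification text; the case study's actual wording is not shown.
METRICS = {
    "Correctness": "Facts about products, orders and policies are accurate.",
    "Relevance": "The answer addresses the user's question.",
    "Task Completion": "The user's request is fully handled.",
    "Compliance": "The answer follows the assistant's rules and policies.",
}

# A metric judge takes (spec, question, answer) and returns a score in [0, 1].
MetricJudge = Callable[[str, str, str], float]


def evaluate_prompt(
    qa_pairs: list[tuple[str, str]], judge: MetricJudge
) -> dict[str, float]:
    """Average each metric's judge score over (question, answer) pairs."""
    return {
        name: sum(judge(spec, q, a) for q, a in qa_pairs) / len(qa_pairs)
        for name, spec in METRICS.items()
    }
```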

Step 3: Create an initial prompt

We start from a manually written baseline prompt that defines the AI assistant's role and expected behavior.

Step 4: Run prompt optimization algorithms

Mentiora AI can run several prompt optimization methods to improve the initial prompt. Here, we evaluate (1) manual prompt optimization, (2) one-step LLM-based optimization (direct prompt modification), (3) two-step LLM-based optimization (analysis followed by modification), and (4) GEPA (Agrawal et al., 2025). We compare the resulting prompts on the defined metrics.
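The comparison can be sketched as a small harness that runs every method from the same initial prompt and scores each result with one shared evaluator. The optimizers are placeholder callables (each would wrap, say, the one-step or two-step rewrite, or GEPA, none of which is implemented here), and ranking by the unweighted mean over metrics is an illustrative choice rather than the case study's procedure.

```python
from typing import Callable

Optimizer = Callable[[str], str]               # initial prompt -> optimized prompt
Evaluator = Callable[[str], dict[str, float]]  # prompt -> per-metric scores


def compare_optimizers(
    initial_prompt: str,
    optimizers: dict[str, Optimizer],
    evaluate: Evaluator,
) -> list[tuple[str, dict[str, float]]]:
    """Run each optimizer from the same starting prompt and rank the results."""
    results = [("baseline", evaluate(initial_prompt))]
    for name, optimize in optimizers.items():
        results.append((name, evaluate(optimize(initial_prompt))))

    def mean_score(item: tuple[str, dict[str, float]]) -> float:
        scores = item[1]
        return sum(scores.values()) / len(scores)

    return sorted(results, key=mean_score, reverse=True)
```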

Summary

Prompt optimization is a practical and effective lever for improving the performance of AI assistants.

While manual prompt engineering is useful for initial development, automatic methods enable systematic and scalable improvements.

These approaches rely on comprehensive evaluation mechanisms to reliably compare prompt variants.

Mentiora AI provides a unified platform to run and compare multiple prompt optimization methods, helping identify high-quality prompts and the best tradeoffs between performance and inference cost.